The real-time ray tracing code I wrote for PS3 Cell and CUDA GPGPU is being used by others. David Yuen's group at the Department of Geology and Geophysics and Minnesota Supercomputing Institute at the University of Minnesota used my PS3 code as the starting point for tsunami modeling. From their IEEE Vis 2007 poster session Use of Ray Tracing Techniques on Tsunami Simulation Data with the PlayStation® 3:

"This poster will discuss how we have used the PlayStation® 3’s cell processor to implement and employ parallelized visualization, through the form of ray tracing techniques, on tsunami data. By adapting Eric Rollins’ ray tracing package, we were able to implement his ray casting techniques on triangles. This allowed us to triangulate our tsunami datasets, stored as [x, y, height] data, and feed the triangulated data into the program. We rendered 4000 triangle objects from the data and applied a colormap based on each triangle’s height value. Finally, we displayed the generated image."

They also published a paper Experiments in scientific computation on the PlayStation 3 in the Springer journal Visual Geosciences. This PowerPoint presentation shows images from my and their work.

Other students are trying CUDA GPGPU ray tracing. Michael Allgyer (DOC) cited my work in his masters thesis proposal. Umut Erturk asked me about PS3 versus GPGPU for his thesis. Others are trying to get my CUDA code working on windows.

Monday, July 21, 2008

Friday, July 18, 2008

ACO for TSP with SML/NJ

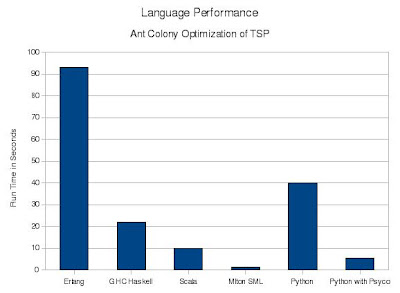

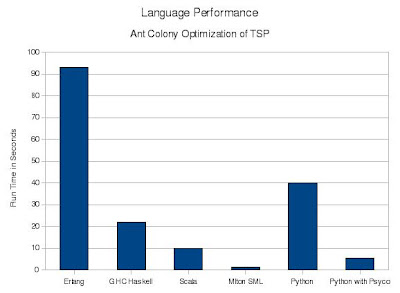

I was asked about the performance of SML/NJ on my usual ACO for TSP test. I used Standard ML of New Jersey v110.67 from Debian apt-get. I ran it in the interactive shell; no Compilation Manager. I didn't experiment with Concurrent ML (I don't think it supports multi-core). Results:

Results scaled up proportionally when I increased iterations by a factor of 10.

Time Multiple

in secs of C

C 0.4 1

MLton SML 1.6 4

SML/NJ 4.9 12

Alice SML 169.0 423

Results scaled up proportionally when I increased iterations by a factor of 10.

Tuesday, July 15, 2008

Shedskin Python

Shedskin is another experimental Python compiler. Previously I tested Psyco, a Just-in-Time Python compiler which operates while the program is running. Shedskin is a more traditional compiler, run in separate steps beforehand. Shedskin actually generates templated C++ code, which is then compiled with GCC.

Since Python is dynamically typed while C++ is statically typed, Shedskin performs type inference over your program. It generates C++ templates to support parametrically polymorphic functions. A simple example, previously used in my article Types and Programming Languages:

Results:

With shedskin, the following C++ template is generated:

and used:

While support for many Python constructs and library functions is currently limited, Shedskin was able to compile unmodified the same Ant-Colony-Optimization Python code I had used previously with Psyco Python. It is significantly faster, coming within 3x the performance of the baseline C version:

Since Python is dynamically typed while C++ is statically typed, Shedskin performs type inference over your program. It generates C++ templates to support parametrically polymorphic functions. A simple example, previously used in my article Types and Programming Languages:

def palindrome(x, y):

return (x, y, x)

print palindrome(1, 2)

print palindrome("a", "b")

Results:

(1, 2, 1)

('a', 'b', 'a')

With shedskin, the following C++ template is generated:

template <class A> tuple2<A, A> *palindrome(A x, A y) {

return (new tuple2<A, A>(3, x, y, x));

}

and used:

str *const_0, *const_1;

const_0 = new str("a");

const_1 = new str("b");

print("%s\n", palindrome(1, 2));

print("%s\n", palindrome(const_0, const_1));

While support for many Python constructs and library functions is currently limited, Shedskin was able to compile unmodified the same Ant-Colony-Optimization Python code I had used previously with Psyco Python. It is significantly faster, coming within 3x the performance of the baseline C version:

Time Multiple

in secs of C

C 0.4 1

Shedskin Python 1.2 3

Psyco Python 5.5 14

Python 40.0 100

Monday, July 14, 2008

C baseline for ACO TSP

In my previous post on the Multi-Core Problem I discussed comparing the performance of multi-core-friendly languages to the baseline performance of single-threaded C code. The test case I have been using is Ant Colony Optimization for TSP. In a recent post Leonardo Maffi has rewritten my ACO TSP code in C. This provides a baseline for comparisons:

gcc (GCC) 4.2.4 (Debian 4.2.4-1).

Compiled gcc -Wall -O3 -s ant_c.c -o ant_c

Still 1000 iterations, 200 cities.

Times for other languages from here .

Initial speedups from here (Scala interpolated), and do not model decay at higher numbers of cores.

Time Multiple Speedup

in secs of C (1->2)

C 0.4 1 n/a

Scala 10.0 25 1.7

Haskell 22.0 55 1.4

Erlang 93.0 233 1.9

gcc (GCC) 4.2.4 (Debian 4.2.4-1).

Compiled gcc -Wall -O3 -s ant_c.c -o ant_c

Still 1000 iterations, 200 cities.

Times for other languages from here .

Initial speedups from here (Scala interpolated), and do not model decay at higher numbers of cores.

Sunday, July 13, 2008

The Multi-Core Problem

"Since 2002, the limits of power, available instruction-level parallelism, and long memory latency have slowed uniprocessor performance [growth] recently, to about 20% per year [down from 52% per year]." Hennessy and Patterson, Computer Architecture: A Quantitative Approach, Fourth Edition (2007), p.3.

Post-2002 the Moore's Law growth in transistor count has been increasingly devoted to adding additional cores to CPUs, rather than trying to improve single-core performance. In the prior decade single-threaded C/C++ applications were sped up "for free" with successive CPU generations. This free ride has ended, and left programmers with a choice. Either they can rewrite their single-threaded C/C++ applications to be multi-threaded (either explicitly, with pthreads, or more implicitly, say with OpenMP), or they can rewrite their applications in a different more multi-core-friendly programming language.

In choosing an alternative language two important related factors must be examined. First, how much of a single-threaded performance hit is taken moving to the new language? If the new language is say 40 times slower than C/C++, you will need a 40-core CPU to get back to where you started (assuming perfect linear speedup). Second, how much worse than linear is the actual speedup? If the speedup starts out at 1.5 with 2 cores, and drops below 1 at 8 cores, the new language will probably never catch single-threaded C/C++.

Post-2002 the Moore's Law growth in transistor count has been increasingly devoted to adding additional cores to CPUs, rather than trying to improve single-core performance. In the prior decade single-threaded C/C++ applications were sped up "for free" with successive CPU generations. This free ride has ended, and left programmers with a choice. Either they can rewrite their single-threaded C/C++ applications to be multi-threaded (either explicitly, with pthreads, or more implicitly, say with OpenMP), or they can rewrite their applications in a different more multi-core-friendly programming language.

In choosing an alternative language two important related factors must be examined. First, how much of a single-threaded performance hit is taken moving to the new language? If the new language is say 40 times slower than C/C++, you will need a 40-core CPU to get back to where you started (assuming perfect linear speedup). Second, how much worse than linear is the actual speedup? If the speedup starts out at 1.5 with 2 cores, and drops below 1 at 8 cores, the new language will probably never catch single-threaded C/C++.

Wednesday, July 9, 2008

Ant Colony Optimization for TSP in Psyco Python

My last post about Parametric Polymorphism and runtime efficiency got me curious about Psyco Python, so I've implemented Ant Colony Optimization for TSP in Psyco Python.

Psyco is a compiler for Python. It is a just-in-time compiler, and uses the run-time type usage information to determine which functions should be native-compiled. It is a specializing compiler -- it will create multiple instantiations of the same function with different type parameters. It is very easy to use; just add 2 lines to the top of your program:

It is an experimental project. The documentation warns that map and lambda perform poorly, and that list comprehensions (often a reasonable substitute) should be used instead. The original developer has moved on to work on the PyPy project; I should investigate that later.

Compared to other tested languages the performance of uncompiled Python is average; with Psyco compilation it is surprisingly good.

All times in seconds.

Update 7/12

Leonardo Maffi has pointed out numerous potential style and performance improvements for the Python code. Turns out Python already has a zip function (hard to find the info searching www.python.org, mixed with the zip file functions :-), so I have removed my implementation from the code. No performance change with my zip removed.

Update 7/15

See new post on Shedskin Python.

Psyco is a compiler for Python. It is a just-in-time compiler, and uses the run-time type usage information to determine which functions should be native-compiled. It is a specializing compiler -- it will create multiple instantiations of the same function with different type parameters. It is very easy to use; just add 2 lines to the top of your program:

import psyco

psyco.full()

It is an experimental project. The documentation warns that map and lambda perform poorly, and that list comprehensions (often a reasonable substitute) should be used instead. The original developer has moved on to work on the PyPy project; I should investigate that later.

Compared to other tested languages the performance of uncompiled Python is average; with Psyco compilation it is surprisingly good.

Erlang Haskell Scala MLton_SML Python Psyco

93 22 10 1.5 40 5.5

All times in seconds.

Update 7/12

Leonardo Maffi has pointed out numerous potential style and performance improvements for the Python code. Turns out Python already has a zip function (hard to find the info searching www.python.org, mixed with the zip file functions :-), so I have removed my implementation from the code. No performance change with my zip removed.

Update 7/15

See new post on Shedskin Python.

Monday, July 7, 2008

Types and Programming Languages

"The first type systems in computer science, beginning in the 1950s in languages such as Fortran, were introduced to improve the efficiency of numerical calculations by distinguishing between integer-valued arithmetic expressions and real-valued ones; this allowed the compiler to use different representations and generate appropriate machine instructions for primitive operations."

I've been reading Pierce's Types and Programming Languages. I've become interested in the interactions between Parametric Polymorphism and runtime efficiency. I do have a interesting (to me :-) point at the end, but it takes a lot of introduction...

rest of my article here

I've been reading Pierce's Types and Programming Languages. I've become interested in the interactions between Parametric Polymorphism and runtime efficiency. I do have a interesting (to me :-) point at the end, but it takes a lot of introduction...

rest of my article here

Subscribe to:

Comments (Atom)